Artificial Intelligence (AI) and Machine Learning (ML) are everywhere, from personalized recommendations to fraud detection and autonomous vehicles. Kubernetes has become the platform of choice for deploying these workloads at scale. But with great power comes great responsibility: securing AI/ML pipelines in Kubernetes presents challenges of its own that every engineer, developer, and security professional should understand.

Whether you’re just starting with Kubernetes or you’re a seasoned pro, this blog will help you secure your AI/ML workloads with actionable tips, real-world examples, and the latest best practices.

Let’s face it: AI/ML workloads are juicy targets. They handle sensitive data, proprietary models, and often rely on open-source components that can introduce vulnerabilities. In 2024, a major breach at Hugging Face highlighted how attackers are now targeting AI infrastructure directly, whether by stealing models, poisoning data, or exploiting misconfigurations.

What’s at stake?

Attackers can poison your models or sneak malicious code into your containers.

How to defend:
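
One common defense against tampered artifacts is to pin a checksum for each model file and refuse to load anything that does not match. Here is a minimal sketch of that idea using only the Python standard library. The function names `sha256_of_file` and `verify_artifact` are hypothetical, and the sketch assumes you already store the expected digest somewhere trustworthy (for example, alongside a signed release).

```python
import hashlib
import hmac
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Stream the file in chunks so large model artifacts need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_artifact(path: Path, expected_sha256: str) -> bool:
    """Return True only if the file's SHA-256 digest matches the pinned value."""
    actual = sha256_of_file(path)
    # compare_digest avoids leaking how many leading characters matched.
    return hmac.compare_digest(actual, expected_sha256.lower())
```

A loader could call `verify_artifact` before deserializing a model and fail closed when it returns `False`. The same principle applies to containers: referencing images by digest rather than by a mutable tag makes a silent swap harder.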

What’s at stake?

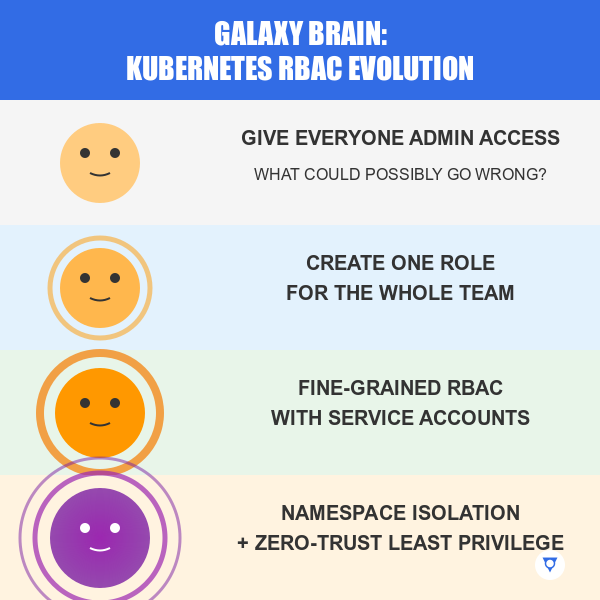

Over-permissioned service accounts can let attackers move laterally or escalate privileges.

How to defend:
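
Least privilege is easier to enforce when you can spot risky rules automatically. The sketch below scans a Kubernetes RBAC Role, represented as a plain Python dict in the shape of the manifest, for wildcard grants and read access to Secrets. The function name `find_risky_rules` and the choice of what counts as "risky" are assumptions for illustration, not an exhaustive audit.

```python
from typing import Dict, List


def find_risky_rules(role: Dict) -> List[str]:
    """Flag wildcard grants and read access to Secrets in a Role or ClusterRole dict."""
    findings = []
    for index, rule in enumerate(role.get("rules", [])):
        # A "*" in any of these fields grants far more than most workloads need.
        for field in ("apiGroups", "resources", "verbs"):
            if "*" in rule.get(field, []):
                findings.append(f"rule {index}: wildcard in {field}")
        reads = {"get", "list", "watch"} & set(rule.get("verbs", []))
        if "secrets" in rule.get("resources", []) and reads:
            findings.append(f"rule {index}: read access to secrets")
    return findings


# Hypothetical Role for a training job that only needs to read ConfigMaps.
training_role = {
    "kind": "Role",
    "metadata": {"name": "trainer", "namespace": "ml"},
    "rules": [{"apiGroups": [""], "resources": ["configmaps"], "verbs": ["get"]}],
}
print(find_risky_rules(training_role))
```

A check like this could run in CI against rendered manifests, so an over-broad Role is caught before it reaches the cluster.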

What’s at stake?

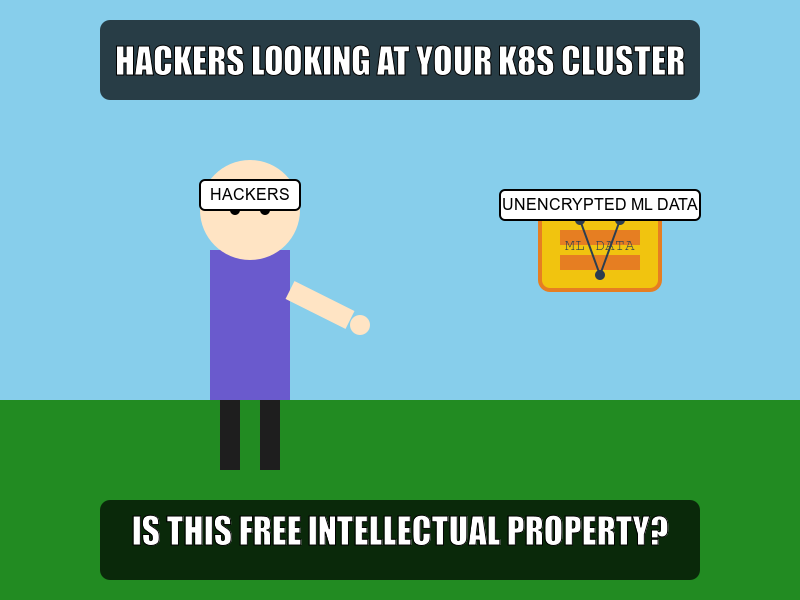

Sensitive training data or proprietary models can be stolen if not properly protected.

How to defend:
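
One small, concrete step is to keep credentials for data stores and model registries out of plain-text environment variables. The sketch below checks a Pod manifest, given as a Python dict, for sensitive-looking variables that carry a literal `value` instead of a `valueFrom.secretKeyRef` reference. The name `find_literal_secrets` and the keyword list are assumptions, and name matching like this is only a heuristic.

```python
from typing import Dict, List

# Assumed naming hints; adjust to match your own conventions.
SENSITIVE_HINTS = ("password", "secret", "token", "key")


def find_literal_secrets(pod: Dict) -> List[str]:
    """List env vars that look sensitive but are set as literal values."""
    findings = []
    for container in pod.get("spec", {}).get("containers", []):
        for env in container.get("env", []):
            name = env.get("name", "")
            looks_sensitive = any(hint in name.lower() for hint in SENSITIVE_HINTS)
            # A literal "value" is stored in the manifest itself; a
            # "valueFrom.secretKeyRef" points at a Secret object instead.
            if looks_sensitive and "value" in env:
                container_name = container.get("name", "?")
                findings.append(f"{container_name}: {name} is set as a literal value")
    return findings
```

This does not replace encryption at rest or access controls on storage, but it removes one easy way for credentials to leak through manifests and logs.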

What’s at stake?

Traditional monitoring may miss subtle threats in dynamic AI/ML workloads.

How to defend:
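
Runtime security tools do this far more thoroughly, but the underlying idea of comparing behavior against a baseline can be sketched in a few lines. The example below flags samples of some per-pod metric (say, outbound bytes per minute) that sit far from the mean. The function name `flag_anomalies`, the metric, and the threshold of 3 standard deviations are all assumptions chosen for illustration.

```python
import statistics
from typing import List, Sequence


def flag_anomalies(samples: Sequence[float], threshold: float = 3.0) -> List[int]:
    """Return indexes of samples more than `threshold` standard deviations from the mean."""
    if len(samples) < 2:
        return []
    mean = statistics.fmean(samples)
    stdev = statistics.pstdev(samples)
    if stdev == 0:
        # All samples are identical, so nothing stands out.
        return []
    return [i for i, value in enumerate(samples) if abs(value - mean) / stdev > threshold]
```

A fixed z-score is a blunt instrument for bursty training jobs, so treat the output as a prompt for investigation rather than as an alert you can act on blindly.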

What’s at stake?

Misconfigurations or open networks make it easy for attackers to exploit vulnerabilities.

How to defend:
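
A widely used starting point for closing open networks is a default-deny NetworkPolicy per namespace, with explicit allow rules layered on top. The sketch below builds that manifest as a Python dict and prints it as JSON, which `kubectl apply -f -` accepts. The helper name `default_deny_policy` and the namespace `ml-training` are hypothetical, and the policy only takes effect if your cluster's network plugin enforces NetworkPolicy.

```python
import json
from typing import Dict


def default_deny_policy(namespace: str) -> Dict:
    """Build a NetworkPolicy that blocks all ingress and egress in a namespace."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny-all", "namespace": namespace},
        "spec": {
            # An empty podSelector matches every pod in the namespace.
            "podSelector": {},
            # Listing both types with no allow rules denies traffic in both directions.
            "policyTypes": ["Ingress", "Egress"],
        },
    }


print(json.dumps(default_deny_policy("ml-training"), indent=2))
```

With this in place, each training or inference service needs its own policy that allows only the traffic it requires, such as DNS and access to its data store.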

What’s at stake?

AI/ML workloads face unique threats like data poisoning and model theft.

How to defend:
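
Data poisoning often shows up as a shift in what the training data looks like. As a rough illustration, the sketch below compares the label mix of a new batch against a trusted baseline and reports classes whose share moved by more than a tolerance. The function name `label_shift` and the 10-point tolerance are assumptions, and a check like this catches only crude poisoning, not carefully crafted samples.

```python
from collections import Counter
from typing import Dict, Hashable, Sequence


def label_shift(
    baseline: Sequence[Hashable],
    batch: Sequence[Hashable],
    tolerance: float = 0.10,
) -> Dict[Hashable, float]:
    """Return labels whose share of the data changed by more than `tolerance`."""
    if not baseline or not batch:
        raise ValueError("baseline and batch must both be non-empty")
    base_counts = Counter(baseline)
    batch_counts = Counter(batch)
    shifted = {}
    for label in set(base_counts) | set(batch_counts):
        base_share = base_counts[label] / len(baseline)
        batch_share = batch_counts[label] / len(batch)
        change = batch_share - base_share
        if abs(change) > tolerance:
            shifted[label] = round(change, 3)
    return shifted
```

A pipeline could run this before each retraining job and hold the batch for review when the result is non-empty.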

Here’s a quick checklist you can use today:

Securing AI/ML workloads in Kubernetes isn’t just for security teams; it’s everyone’s job. By following these best practices, you can protect your data, models, and infrastructure while empowering your team to innovate with confidence.

Ready to level up?

Try these strategies in your next project, and explore hands-on labs from AppSecEngineer to practice what you’ve learned. Your clusters, and your data scientists, will thank you!
