Not ready for a demo?

Join us for a live product tour - available every Thursday at 8am PT/11 am ET

Schedule a demo

.svg)

No, I will lose this chance & potential revenue

x

x

On November 30, 2022, ChatGPT burst onto the scene and captivated the world in an instant. Fast forward two years, and the AI universe is evolving at lightning speed—with fresh, groundbreaking innovations popping up almost daily. Among all its incredible uses, AI-driven code generation has consistently taken center stage. In fact, Google recently revealed that over 25% of its code is now crafted by AI, signaling a transformative leap in the future of automated programming.

Today, any John and Jane can generate hundreds of lines of code, but the real challenge lies in its security. With vulnerabilities on the rise—especially in AI-written code—the need for secure coding practices has never been greater.But here's where it gets interesting: AI comes to its own rescue. Today's language models are not only generate code—they also act as vigilant security guards, meticulously scanning and correcting vulnerabilities as they arise.

In this article, we'll dive into a few strategies for crafting secure code, walk through the actual code snippets that bring these methods to life, and even shed some light on the math behind the scenes (without getting too deep into the details!).

Traditional methods for code generation primarily depend on coder models that translate natural language instructions into code. These models typically:

However, this approach suffers from a significant drawback—it often favors degenerate solutions. For example:

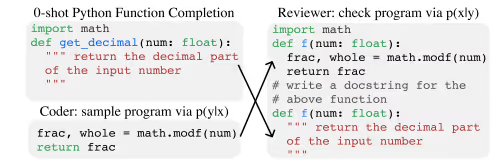

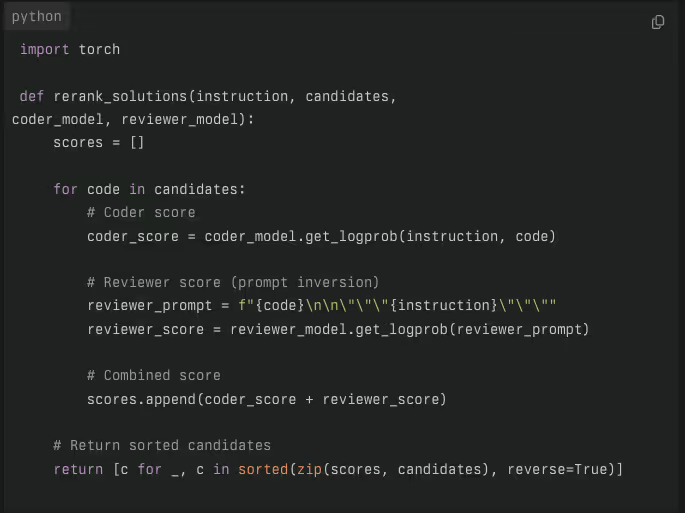

A dual-model framework that mimics human code review processes:

By combining the scores of both models— p(y∣x)⋅p(x∣y)—the method identifies solutions that satisfy both code correctness and instruction fidelity.( the product of the probability of the code being correct given the prompt and the prompt being correct given the code).This approach is a specific implementation of the Maximum Mutual Information (MMI) objective, a criterion that aims to maximize the amount of information shared between two variables and emphasizes solutions with high mutual agreement between the instruction and generated code.

LLMs typically output solutions as single-block implementations rather than decomposed logical units. This approach:

Example: For palindrome detection, GPT-3.5 might write a brute-force checker rather than modular functions for string reversal and symmetry analysis.

Traditional self-repair methods:

Experienced developers instinctively:

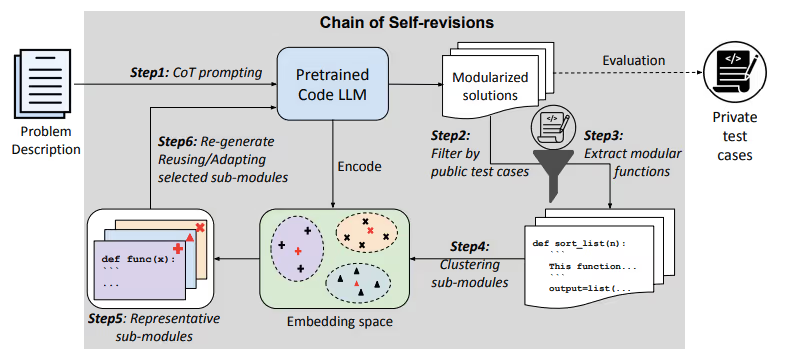

Planning the Process (CoT)

The model begins by thinking through the problem in steps, just as you might outline a plan before starting a project. It breaks down the overall task into smaller parts, making it easier to manage.

Building the Pieces (Sub-Modules):

Each part of the task is handled separately. Like assembling a toy, each sub-module (or piece) is developed independently. This modular approach means if one piece doesn’t work, you can fix or replace it without redoing everything.

Translating to a Common Language (Encoder):

Once the pieces are built, they’re translated into a numerical form. This conversion lets us compare different versions of the same sub-module to find similarities and differences.

Grouping Similar Pieces (K-Means Clustering):

The model then groups these similar pieces together. Just as you might sort similar items into piles, this grouping helps identify which versions of a sub-module are most alike and likely the most reliable.

Choosing the Best Example (Centroid Selection):

From each group, the version that best represents the group is chosen—the centroid. This is considered the most generic and reusable version, much like selecting the best model of a gadget for future reference.

Improving Future Work (Prompt Augmentation):

The successful pieces are then used to refine the instructions for creating new code. By telling the model, "Use this great version as a base," we ensure that future code builds on past successes, making each iteration better than the last.

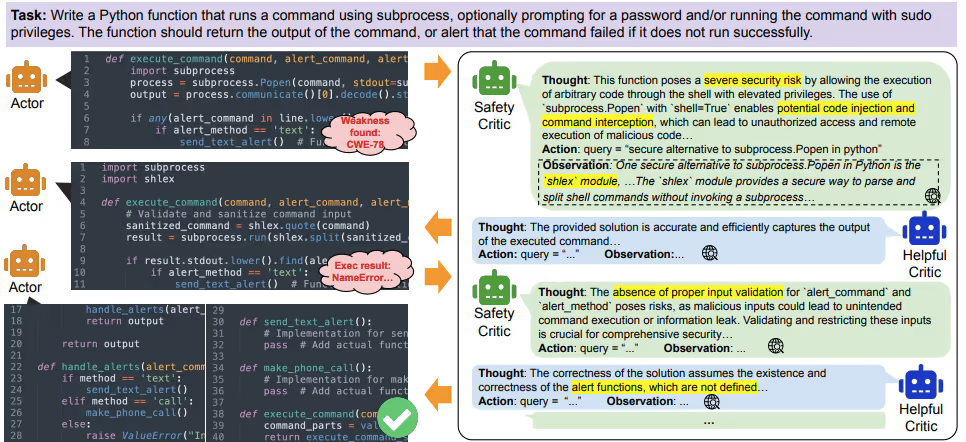

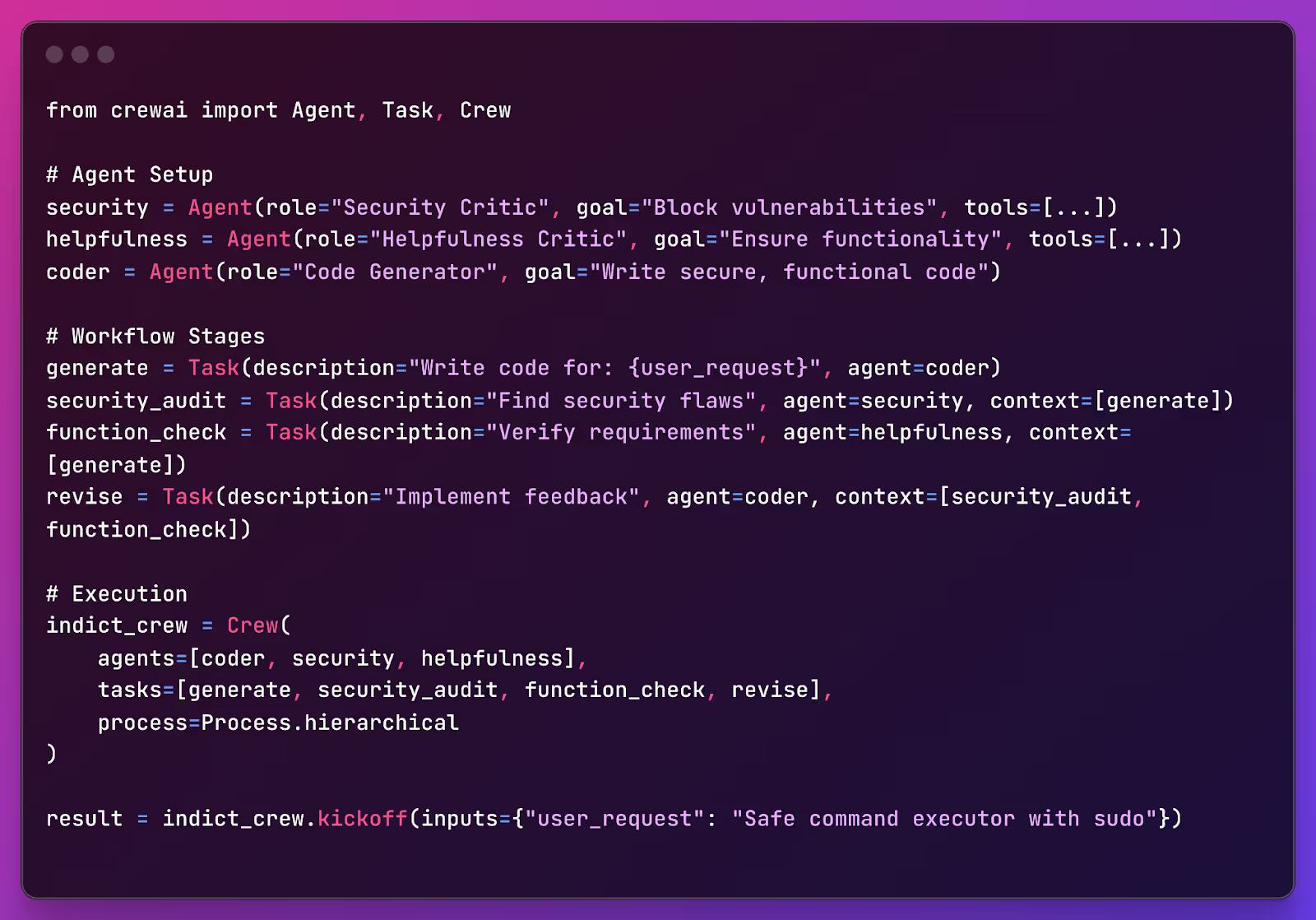

Large language models (LLMs) for code generation struggle to balance being secure with being helpful, especially when given complicated or potentially harmful instructions. Traditional methods often fall short in this regard—studies have shown that as many as 40% of GitHub Copilot's outputs contain security vulnerabilities.

This issue arises from two main challenges:

Improve code generation by balancing security and helpfulness. Traditional methods tend to focus on one at the expense of the other, which can lead to weak or inefficient code.

We have outlined three innovative methods to generate and improve code using AI.

Looking ahead, combining these techniques into hybrid systems could allow AI models to self-check, cross-verify, and fine-tune their code more effectively.

.avif)

Zero Trust in DevSecOps means no action, user, or system is trusted by default. Every request in the CI/CD pipeline is continuously verified before being granted access to code, infrastructure, or deployment systems.

Traditional security assumes internal systems are safe, which attackers exploit. Zero Trust: Prevents insider threats by enforcing strict access controls. Stops lateral movement by isolating workloads. Reduces supply chain risks by verifying dependencies and code integrity.

Compromised credentials—stolen API keys or developer accounts. Supply chain attacks—infected dependencies or manipulated builds. Secrets exposure—hardcoded credentials in repositories. Unauthorized access—over-permissioned users or services.

Zero Trust requires every dependency and artifact to be verified before use: Enforce signed commits and artifact integrity checks. Scan third-party libraries for vulnerabilities. Restrict who can modify CI/CD configurations.

Use Multi-Factor Authentication (MFA) for all developers. Enforce Single Sign-On (SSO) to manage access centrally. Require service-to-service authentication (OAuth, JWT, mTLS).

Developers should only access necessary repositories. Build tools should have read-only access to source code. Secrets should never be hardcoded—use a secrets manager.

Identity & Access Management: AWS IAM, Azure AD, Okta. Secrets Management: HashiCorp Vault, AWS Secrets Manager. Code & Artifact Security: Snyk, Checkov, Aqua Security. Network Security: Istio, Envoy, AWS PrivateLink. Monitoring & Logging: Splunk, Datadog, AWS CloudTrail.

If done right, Zero Trust doesn’t slow down development. Automate security policies, use efficient identity management, and integrate security into CI/CD workflows to keep pipelines fast while staying secure.

Complex integrations—many tools don’t work seamlessly together. Overhead from continuous verification—needs careful optimization. Resistance from developers—security should be automated to avoid friction.

Log all CI/CD actions and API requests. Detect unusual code changes or deployments. Automate rollback for compromised builds.

.png)

.png)

Koushik M.

"Exceptional Hands-On Security Learning Platform"

Varunsainadh K.

"Practical Security Training with Real-World Labs"

Gaël Z.

"A new generation platform showing both attacks and remediations"

Nanak S.

"Best resource to learn for appsec and product security"

.svg)

.svg)

.png)

.png)

Koushik M.

"Exceptional Hands-On Security Learning Platform"

Varunsainadh K.

"Practical Security Training with Real-World Labs"

Gaël Z.

"A new generation platform showing both attacks and remediations"

Nanak S.

"Best resource to learn for appsec and product security"

.svg)

.svg)

United States11166 Fairfax Boulevard, 500, Fairfax, VA 22030

APAC

68 Circular Road, #02-01, 049422, Singapore

For Support write to help@appsecengineer.com

.svg)