
As enterprises rapidly integrate agentic AI systems into Security Operations (SecOps), robust, scalable architectures become essential. Projections point to as much as 75% of organizations adopting multi-agent AI for threat detection by 2025, but successful deployment hinges on careful design and security considerations. This guide provides a practical blueprint for building secure multi-agent AI systems, turning AI from a potential liability into a security asset.

Traditional single-agent AI systems face challenges like alert fatigue and slow response times. Multi-agent architectures address these through specialized roles, though their effectiveness depends on careful design:

Challenge: A regional hospital network faced misclassified patient records due to AI hallucinations, delaying critical patch deployment by 72 hours.

Solution:

Impact: Reduced mean time to respond (MTTR) from 18 hours to 2.3 hours while achieving HIPAA/GDPR compliance.

Challenge: A European bank lost $4.8M/month to AI-generated synthetic identities mimicking transaction histories.

Solution:

Impact: Reduced fraud losses by 39% while maintaining <5ms latency for legitimate transactions.

Challenge: Automotive IoT sensors generated 12M false alerts/month, masking a ransomware attack on robotic welders.

Solution:

Impact: Zero production downtime for 180 days post-implementation.

Multi-agent systems thrive on specialization. Each agent should have a clearly defined role to avoid overlap and improve efficiency. However, if these systems aren't designed with guardrails, they risk becoming high-value targets for prompt injection, data leakage, and more. See the article on the top 5 reasons why LLM security fails for these pitfalls in detail.

For example:

These are especially critical in AI-driven architectures. The 2025 OWASP Top 10 for LLMs outlines the security concerns in depth, offering a clear look at the emerging AI-specific vulnerabilities you must design for.

For enterprises pushing the boundaries of AI, it’s not just about avoiding failure—it’s about architecting security from the ground up. Here’s how secure multi-agent AI systems are transforming enterprise SecOps.

Secure multi-agent AI isn’t about perfection—it’s about creating adaptable systems that evolve with threats. By integrating Zero Trust principles, XAI guardrails, and AppSecEngineer’s labs, enterprises can mitigate risks while harnessing AI’s potential.

"Multi-agent AI systems are redefining SecOps by enabling faster incident response without compromising security."

– Dr. Alice Zheng, ML Security Lead @ Microsoft.

"Dynamic access control is the cornerstone of secure multi-agent architectures—it's no longer optional."

– Raj Patel, CISO @ Lockheed Martin.

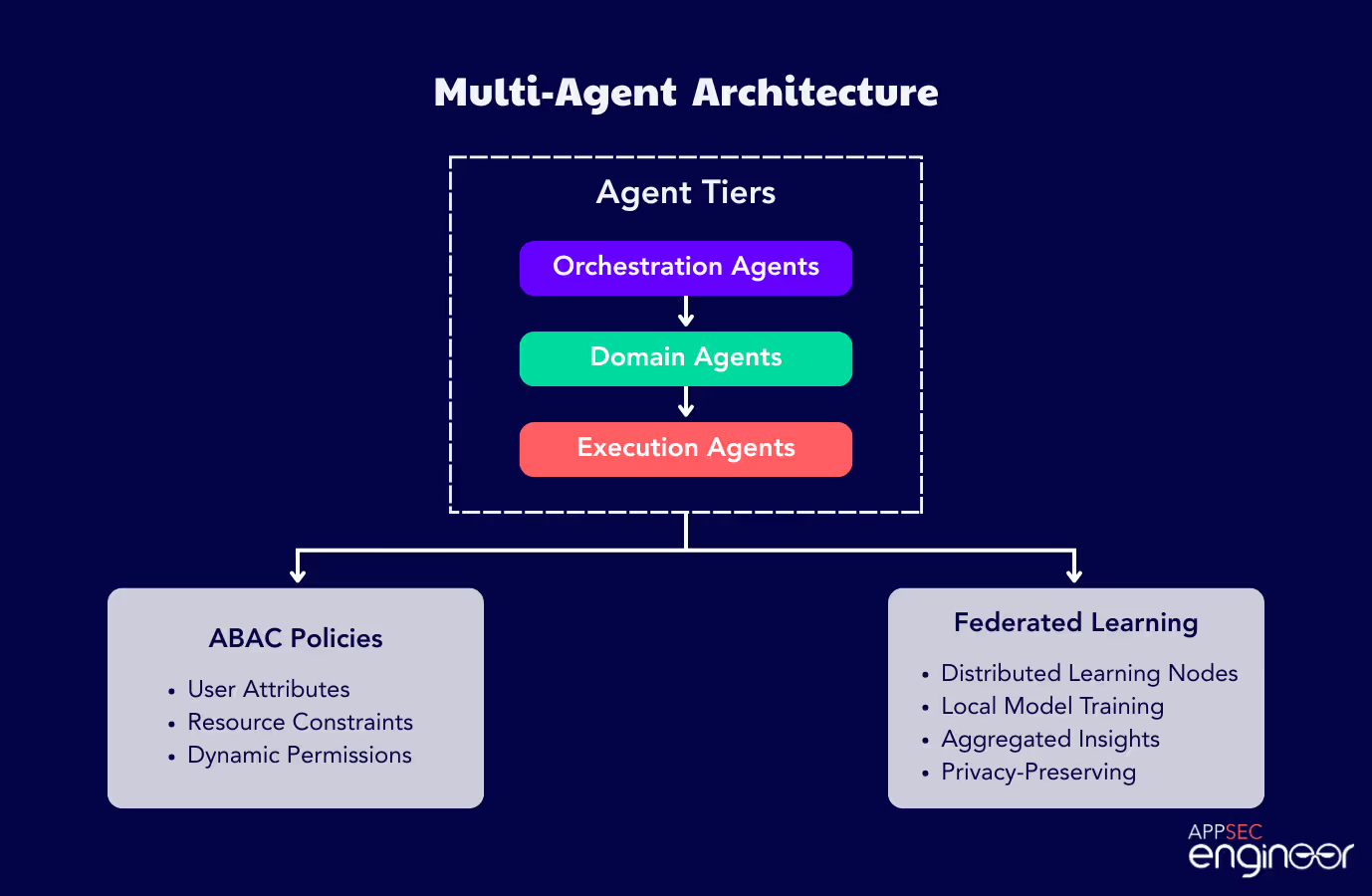

Multi-agent AI architecture refers to a system where multiple specialized AI agents collaborate to perform distinct security tasks—like threat detection, incident response, and threat intelligence. Each agent operates independently but communicates and coordinates with others to reduce alert fatigue, speed up response, and scale security operations.
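As a rough illustration of the coordination described above, the following minimal sketch chains three hypothetical agents (detection, threat intelligence, response) over a shared alert object. Every class name, field, threshold, and the placeholder blocklist entry is an assumption made for illustration; it is not tied to any particular agent framework.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Alert:
    source_ip: str
    description: str
    severity: int = 0                                # set by the detection agent
    notes: List[str] = field(default_factory=list)   # audit trail of agent actions


class DetectionAgent:
    """Hypothetical agent: assigns an initial severity to a raw event."""

    def handle(self, alert: Alert) -> Alert:
        alert.severity = 8 if "ransomware" in alert.description.lower() else 2
        alert.notes.append("detection: scored")
        return alert


class ThreatIntelAgent:
    """Hypothetical agent: raises severity for sources on a made-up blocklist."""

    KNOWN_BAD = {"203.0.113.7"}  # placeholder address from a documentation range

    def handle(self, alert: Alert) -> Alert:
        if alert.source_ip in self.KNOWN_BAD:
            alert.severity = min(10, alert.severity + 2)
            alert.notes.append("intel: known-bad source")
        return alert


class ResponseAgent:
    """Hypothetical agent: picks an action from the final severity."""

    def handle(self, alert: Alert) -> Alert:
        action = "isolate-host" if alert.severity >= 7 else "log-only"
        alert.notes.append(f"response: {action}")
        return alert


def run_pipeline(alert: Alert, agents: list) -> Alert:
    """Pass the alert through each specialized agent in order."""
    for agent in agents:
        alert = agent.handle(alert)
    return alert


if __name__ == "__main__":
    agents = [DetectionAgent(), ThreatIntelAgent(), ResponseAgent()]
    result = run_pipeline(Alert("203.0.113.7", "Possible ransomware beacon"), agents)
    print(result.severity, result.notes)
```

A sequential pipeline is the simplest form of coordination. Real deployments would more likely use a message bus or an orchestration framework, but the separation of roles stays the same.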

Single-agent systems often lack context, generate high false positives, and can’t scale across diverse threat surfaces. Multi-agent setups reduce detection noise, enable faster incident response, and bring specialization—making them more efficient for real-world SecOps use cases like fraud detection, ransomware response, or anomaly detection in OT environments.

- Prompt injection and model manipulation
- Data leakage across agents
- Over-privileged access
- Lack of explainability in AI decisions
- Insecure inter-agent communication

These risks demand layered controls including ABAC, mutual TLS, zero trust policies, and explainable AI (XAI) implementations.

Use TLS 1.3 for encrypted data-in-transit. Implement mutual authentication using certificates or OAuth2. Monitor traffic between agents using Intrusion Detection Systems (IDS). Enforce strict namespace and API access controls in platforms like Kubernetes.
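To make the TLS 1.3 and mutual-authentication advice concrete, here is a minimal sketch using Python's standard `ssl` module. The function names and the certificate file parameters are hypothetical; the certificate paths are optional here only so the sketch runs without real key material, whereas an actual deployment would always supply them.

```python
import ssl
from typing import Optional


def make_agent_server_context(
    cert_file: Optional[str] = None,
    key_file: Optional[str] = None,
    ca_file: Optional[str] = None,
) -> ssl.SSLContext:
    """Server-side context: TLS 1.3 only, and clients must present a certificate."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_3   # refuse TLS 1.2 and older
    ctx.verify_mode = ssl.CERT_REQUIRED            # mutual TLS: require a client cert
    if cert_file and key_file:
        ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)
    if ca_file:
        ctx.load_verify_locations(cafile=ca_file)  # CA that signs agent certificates
    return ctx


def make_agent_client_context(
    cert_file: Optional[str] = None,
    key_file: Optional[str] = None,
    ca_file: Optional[str] = None,
) -> ssl.SSLContext:
    """Client-side context: verifies the server and presents its own certificate."""
    ctx = ssl.create_default_context(ssl.Purpose.SERVER_AUTH, cafile=ca_file)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_3
    if cert_file and key_file:
        ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)
    return ctx


if __name__ == "__main__":
    server_ctx = make_agent_server_context()
    print(server_ctx.minimum_version, server_ctx.verify_mode)
```

Either context can then wrap a socket with `wrap_socket`. In Kubernetes, a service mesh is a common way to get the same effect without changing agent code.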

- Healthcare: To reduce AI hallucinations and secure patient data workflows.
- Finance: For fraud detection (e.g., synthetic identity attacks).
- Manufacturing: To manage massive sensor data and prevent cyber-physical sabotage.

Use cases are expanding rapidly in energy, telecom, and government sectors as well.

- CrewAI: For multi-agent workflow management.
- JADE / SPADE: Open-source platforms for agent-based systems.
- TensorFlow Federated: For privacy-preserving distributed training.
- Kubernetes: For scalable deployment and lifecycle management.
- Open Policy Agent (OPA): For implementing ABAC access control.
- SHAP / LIME: For AI explainability in decision-making.

Attribute-Based Access Control (ABAC) adjusts permissions dynamically based on context (agent behavior, task, data sensitivity). It reduces the attack surface by enforcing least privilege access in real time—especially important when agents operate autonomously.
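The idea can be sketched in a few lines of Python. The attribute names, the rule format, the sensitivity levels, and the anomaly-score thresholds below are all assumptions chosen for illustration; a production system would more likely express such rules in a policy engine such as OPA.

```python
from dataclasses import dataclass
from typing import Sequence

# Hypothetical ordering of data sensitivity labels, lowest to highest.
SENSITIVITY_RANK = {"public": 0, "internal": 1, "restricted": 2}


@dataclass(frozen=True)
class AccessRequest:
    agent_role: str
    action: str
    data_sensitivity: str
    anomaly_score: float  # 0.0 = normal behaviour, 1.0 = highly anomalous


@dataclass(frozen=True)
class Rule:
    agent_role: str
    action: str
    max_sensitivity: str
    max_anomaly_score: float


# Illustrative policy: each rule grants one role one action under conditions.
POLICY = (
    Rule("detection", "read", "restricted", 0.5),
    Rule("response", "isolate-host", "internal", 0.3),
)


def is_allowed(request: AccessRequest, policy: Sequence[Rule] = POLICY) -> bool:
    """Default deny: allow only when some rule matches every attribute."""
    for rule in policy:
        if (
            rule.agent_role == request.agent_role
            and rule.action == request.action
            and SENSITIVITY_RANK[request.data_sensitivity]
            <= SENSITIVITY_RANK[rule.max_sensitivity]
            and request.anomaly_score <= rule.max_anomaly_score
        ):
            return True
    return False


if __name__ == "__main__":
    print(is_allowed(AccessRequest("detection", "read", "restricted", 0.1)))
    print(is_allowed(AccessRequest("detection", "read", "restricted", 0.9)))
```

Because the anomaly score is evaluated on every request, the same agent can lose access the moment its behavior drifts, which is what distinguishes this from a static role check.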

Use Explainable AI (XAI) techniques such as:

- SHAP for understanding feature influence.
- LIME for explaining individual decisions.
- Counterfactuals to show how inputs could change outputs.

These help security teams audit, debug, and trust agent actions, which is essential for compliance and incident response.
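To show what feature attribution looks like in practice, the sketch below computes exact Shapley values (the quantity that SHAP approximates) for a toy risk model by enumerating every feature ordering. It does not use the `shap` library; the model, feature names, and weights are hypothetical, and brute-force enumeration is only feasible for a handful of features.

```python
from itertools import permutations
from math import factorial
from typing import Callable, Dict


def risk_score(features: Dict[str, float]) -> float:
    """Toy, hypothetical risk model with one interaction term."""
    score = 0.5 * features["failed_logins"] + 2.0 * features["new_device"]
    score += 1.5 * features["new_device"] * features["off_hours"]
    return score


def shapley_values(
    model: Callable[[Dict[str, float]], float],
    instance: Dict[str, float],
    baseline: Dict[str, float],
) -> Dict[str, float]:
    """Average each feature's marginal contribution over all feature orderings."""
    names = list(instance)
    totals = {name: 0.0 for name in names}
    for order in permutations(names):
        current = dict(baseline)          # start from the reference input
        previous = model(current)
        for name in order:
            current[name] = instance[name]  # switch one feature to its real value
            updated = model(current)
            totals[name] += updated - previous
            previous = updated
    n_orderings = factorial(len(names))
    return {name: total / n_orderings for name, total in totals.items()}


if __name__ == "__main__":
    instance = {"failed_logins": 6, "new_device": 1, "off_hours": 1}
    baseline = {"failed_logins": 0, "new_device": 0, "off_hours": 0}
    for name, value in shapley_values(risk_score, instance, baseline).items():
        print(f"{name}: {value:+.2f}")
```

The attributions always sum to the difference between the model output for the instance and for the baseline, which gives an auditor a complete accounting of why an agent scored an event the way it did.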

Use simulation tools like:

- SPADE: For communication-heavy scenarios.
- GAMA: For modeling spatial interactions and large-scale simulations.

Conduct fault injection, workload spike tests, and inter-agent conflict simulations to ensure system resilience.
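Before reaching for a full simulator, a fault-injection and workload-spike test can be prototyped in plain Python. The sketch below is an assumption-laden toy: `FlakyChannel`, `send_with_retry`, and `run_spike` are hypothetical names, and the channel simply drops a configurable fraction of messages to mimic an unreliable link between agents.

```python
import random


class FlakyChannel:
    """Hypothetical inter-agent channel that drops a fraction of messages."""

    def __init__(self, drop_rate: float, seed: int = 0) -> None:
        self.drop_rate = drop_rate
        self.rng = random.Random(seed)   # seeded so test runs are repeatable
        self.delivered = []

    def send(self, message: str) -> bool:
        if self.rng.random() < self.drop_rate:
            return False                 # injected fault: message is lost
        self.delivered.append(message)
        return True


def send_with_retry(channel: FlakyChannel, message: str, max_attempts: int = 3) -> bool:
    """Retry a send until it succeeds or the attempt budget is used up."""
    for _ in range(max_attempts):
        if channel.send(message):
            return True
    return False


def run_spike(channel: FlakyChannel, n_messages: int, max_attempts: int = 3) -> int:
    """Send a burst of alerts and return how many were delivered."""
    return sum(
        send_with_retry(channel, f"alert-{i}", max_attempts)
        for i in range(n_messages)
    )


if __name__ == "__main__":
    for attempts in (1, 3):
        channel = FlakyChannel(drop_rate=0.3, seed=42)
        delivered = run_spike(channel, n_messages=1000, max_attempts=attempts)
        print(f"max_attempts={attempts}: delivered {delivered} of 1000")
```

Comparing delivery counts across retry budgets and drop rates gives a first estimate of how much message loss the agents tolerate before alerts go missing.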

- Healthcare (HIPAA): Implements data minimization, encryption, explainable AI for decisions, and full audit logging.
- Finance (PCI DSS): Uses tokenization, ABAC, full data encryption, and traceable logs of all payment-related agent actions.

These controls map directly to compliance mandates and reduce audit overhead.


Koushik M.

"Exceptional Hands-On Security Learning Platform"

Varunsainadh K.

"Practical Security Training with Real-World Labs"

Gaël Z.

"A new generation platform showing both attacks and remediations"

Nanak S.

"Best resource to learn for appsec and product security"


United States

11166 Fairfax Boulevard, 500, Fairfax, VA 22030

APAC

68 Circular Road, #02-01, 049422, Singapore

For Support write to help@appsecengineer.com
